What is Bayesian belief? (Part 1)

If someone says, “I believe in science”, what do you think of this statement? At first, I found it funny, because science is not something we believe in, but something we pursue to understand the natural world based on evidence, mainly through observation, right? But I am starting to wonder: if what I am trying to understand is based on evidence, don’t I need enough evidence to convince myself, that is, to believe firmly in what I am trying to understand?

For example, if you are a prosecutor who needs to convince jurors that the accused is guilty of a crime, you need to bring enough evidence (beyond a reasonable doubt, in the U.S.) to lead to a conviction, that is, a strong belief that the charge (indictment) is right.

Then, the question is: how much evidence (observation) do I need before I can confidently say that my initial guess (aka, hypothesis) has turned out to be true, or close to the truth? Can I quantify the notion of belief based on evidence?

By the way, “I believe in science” is a line from the film Nacho Libre, in which a character named Esqueleto comically tells Nacho, a Catholic friar, that he does not believe in God. I bring up this movie quote because, when I heard it, it reminded me of an English Presbyterian minister who struggled with the notion of belief based on evidence. His name is Thomas Bayes and, as you may have guessed, the title of this post refers to his famous theorem (aka, Bayes’ rule), which provides the theoretical basis for Bayesian inference and for many machine learning (and, more broadly, artificial intelligence) techniques.

As a side note, some believe that he came up with this rule in order to rebut the famous Scottish philosopher David Hume’s argument against believing in biblical miracles on the evidence of testimony. If this is true, it is interesting that modern AI techniques are derived from a rule that may have been designed to support biblical miracles.

In a nutshell, Bayes’ theorem, or more concretely Bayesian inference, describes a mathematical way of updating a belief (the probability of a hypothesis) as more evidence (observation) becomes available. To understand Bayes’ rule, I am going to introduce the notions of likelihood, prior, and posterior.

Let me use a simple example of tossing a coin to explain likelihood without math notation first. If someone asks, “What is the probability of getting HEADs?”, the typical answer is 50%, based on the assumption that the coin is fair. But in reality, we cannot know in advance whether the coin is fair. Why? Because we don’t know for sure until we toss the same coin repeatedly and see the results. We need observations in order to strongly believe, or prove, our hypothesis of a “fair coin”. Therefore, we can ask a different question about the coin: how likely is it that the coin is fair, given the results of my tosses?

For example, if the first toss shows HEADs, how likely is it that the coin is fair? I would say “not sure or unlikely”, since I have observed only one piece of evidence, a single HEADs. How about HEADs, TAILs, HEADs and TAILs when the same coin is tossed 4 times in a row? I would say it is more likely than in the previous case, but I am still not sure that the coin is fair: the number of observations is not enough for me to confirm my hypothesis. If I increase the number of tosses to, say, 100, and observe 51 HEADs and 49 TAILs, then I can say with some confidence that the coin is fair. As I gather more evidence about the coin through tossing, I can be more certain about whether my hypothesis is true or not. In other words, the likelihood of the coin being fair (or not being fair) becomes more apparent as more observations are presented, and consequently, our previous belief (hypothesis) is reinforced or weakened.

Then, how can I measure likelihood? Before answering this question, let me review some basic probability concepts. I am going to start by describing 1) marginal probability, 2) conditional probability, 3) joint probability, 4) marginalization*, and 5) the independent observations assumption and random sampling.

The following sections are going to be a little lengthy, so please be patient with me. I will do my best to make these topics as informative as possible. My intention is to cover enough to help you build up the concepts needed to answer the question: how can I measure likelihood in order to validate my hypothesis?

Basic Probability Concepts

Let’s review some basic concepts with an example. I have 5 balls in a box: 2 of them are red (RED) and the remaining 3 are blue (BLUE). Each ball has either a triangle (TRI) or a rectangle (RECT) shape on its surface. There are 3 TRIs and 2 RECTs.

In addition, each ball has either a sun (SUN) or a moon (MOON) object on its surface. There are 3 SUNs and 2 MOONs. To make things interesting, 2 RED balls and 1 BLUE ball have TRI on their surface, and 2 BLUE balls have RECT. Also, 1 RED ball and 2 BLUE balls have SUN on their surface, whereas 1 RED ball and 1 BLUE ball have MOON, as shown in Figure 1. Equivalently, 2 RECT balls and 1 TRI ball are BLUE, 2 TRI balls are RED, and so on.

These 5 balls can be classified by Color (RED or BLUE), Shape (RECT or TRI) or Object (SUN or MOON).

I am going to draw a ball out of the box. Keep in mind that I can determine the underlying probabilities by looking inside the box. What is the probability of getting a RED ball? There are 2 RED balls out of 5. How about getting a BLUE ball? There are 3 BLUE balls out of 5. In this case, I have a higher chance of getting a BLUE ball.

P(Color = RED) = 2/5 = 0.4

P(Color = BLUE) = 3/5 = 0.6

Similarly, what is the probability of getting a TRI ball? How about getting a RECT ball?

P(Shape=TRI) = 3/5 = 0.6

P(Shape=RECT) = 2/5 = 0.4

Finally, I can also find the probability of getting a SUN ball or MOON ball.

P(Object=SUN) = 3/5 = 0.6

P(Object=MOON) = 2/5 = 0.4

These are called marginal probabilities: each one is the probability of a single attribute taking a value, regardless of the other attributes.
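To make the counting concrete, here is a minimal Python sketch. It assumes the five balls can be written down as dictionaries; the exact color/shape/object combination of each ball is reconstructed from the counts given above, since Figure 1 is not reproduced here. `BOX` and `prob` are hypothetical names chosen for illustration, and `Fraction` keeps the results exact instead of rounding them.

```python
from fractions import Fraction

# Balls reconstructed from the counts in the text (Figure 1 is not reproduced).
BOX = [
    {"Color": "RED",  "Shape": "TRI",  "Object": "SUN"},
    {"Color": "RED",  "Shape": "TRI",  "Object": "MOON"},
    {"Color": "BLUE", "Shape": "TRI",  "Object": "SUN"},
    {"Color": "BLUE", "Shape": "RECT", "Object": "SUN"},
    {"Color": "BLUE", "Shape": "RECT", "Object": "MOON"},
]

def prob(event, given=None):
    """P(event | given), found by counting balls.

    `event` and `given` map attribute names to values, e.g. {"Color": "BLUE"}.
    """
    given = given or {}
    # Keep only the balls that satisfy the condition (the whole box if none).
    pool = [b for b in BOX if all(b[k] == v for k, v in given.items())]
    # Among those, count the balls that match every attribute in `event`.
    hits = [b for b in pool if all(b[k] == v for k, v in event.items())]
    return Fraction(len(hits), len(pool))

# Marginal probabilities: no condition, so the pool is the whole box.
for attribute, values in [("Color", ["RED", "BLUE"]),
                          ("Shape", ["TRI", "RECT"]),
                          ("Object", ["SUN", "MOON"])]:
    for value in values:
        print(f"P({attribute}={value}) = {prob({attribute: value})}")
```

Each printed value matches the hand counts above (2/5, 3/5, 3/5, 2/5, 3/5, 2/5).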

Let’s look at a different scenario. What is the probability of getting a BLUE ball among TRI balls? In this case, I am going to find all TRI balls and then find the BLUE balls among them.

P(Color = BLUE | Shape = TRI) = 1/3 = 0.33

You may ask, what does this formula, P(Color=BLUE | Shape=TRI), mean? First, I can safely ignore the balls with RECT on their surfaces, since I am only interested in TRI balls, as shown in Figure 2. The vertical bar (read as “given”) in “ | Shape=TRI ” simply means that I only need to pay attention to TRI balls. Then, I find the BLUE balls among these 3 TRI balls: there is only 1, hence 1/3. This is called a conditional probability.
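The same counting idea gives the conditional probability: first shrink the pool to the balls that satisfy the condition, then count within that pool. This sketch reuses the hypothetical `BOX` and `prob` names, with the ball contents reconstructed from the counts in the text.

```python
from fractions import Fraction

# Balls reconstructed from the counts in the text (Figure 1 is not reproduced).
BOX = [
    {"Color": "RED",  "Shape": "TRI",  "Object": "SUN"},
    {"Color": "RED",  "Shape": "TRI",  "Object": "MOON"},
    {"Color": "BLUE", "Shape": "TRI",  "Object": "SUN"},
    {"Color": "BLUE", "Shape": "RECT", "Object": "SUN"},
    {"Color": "BLUE", "Shape": "RECT", "Object": "MOON"},
]

def prob(event, given=None):
    """P(event | given), found by counting balls."""
    given = given or {}
    # The condition decides which balls we look at at all.
    pool = [b for b in BOX if all(b[k] == v for k, v in given.items())]
    hits = [b for b in pool if all(b[k] == v for k, v in event.items())]
    return Fraction(len(hits), len(pool))

# Restrict to the 3 TRI balls, then count the BLUE ones among them.
print(prob({"Color": "BLUE"}, given={"Shape": "TRI"}))  # 1/3
```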

Now suppose that what I am really interested in is the probability of drawing a ball that is BLUE and also has a TRI shape on it. What I am looking for here is the intersection of two groups, the BLUE balls and the TRI balls. The correct formulation is shown below.

P(Color=BLUE and Shape=TRI) = P(Color=BLUE, Shape=TRI) = 1/5

Does this make sense? Let’s count: there is 1 BLUE ball with TRI out of 5 balls, or 1/5. This is called a joint probability.

In fact, the joint probability can be expressed as the product of a conditional probability and a marginal probability, as shown below:

P(Color=BLUE, Shape=TRI) = P(Color = BLUE | Shape = TRI) * P(Shape=TRI)

= 1/3 * 3/5 = 1/5
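A quick numerical check of this product rule, again as a minimal sketch with the hypothetical `BOX` and `prob` helpers (ball contents reconstructed from the text). A joint probability is just an `event` with more than one attribute.

```python
from fractions import Fraction

# Balls reconstructed from the counts in the text (Figure 1 is not reproduced).
BOX = [
    {"Color": "RED",  "Shape": "TRI",  "Object": "SUN"},
    {"Color": "RED",  "Shape": "TRI",  "Object": "MOON"},
    {"Color": "BLUE", "Shape": "TRI",  "Object": "SUN"},
    {"Color": "BLUE", "Shape": "RECT", "Object": "SUN"},
    {"Color": "BLUE", "Shape": "RECT", "Object": "MOON"},
]

def prob(event, given=None):
    """P(event | given), found by counting balls."""
    given = given or {}
    pool = [b for b in BOX if all(b[k] == v for k, v in given.items())]
    hits = [b for b in pool if all(b[k] == v for k, v in event.items())]
    return Fraction(len(hits), len(pool))

# Joint probability: both attributes must match at once.
joint = prob({"Color": "BLUE", "Shape": "TRI"})
# Product rule: conditional probability times marginal probability.
product = prob({"Color": "BLUE"}, given={"Shape": "TRI"}) * prob({"Shape": "TRI"})
print(joint, product)  # 1/5 1/5
```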

Interestingly, you can find the same joint probability from a different angle: instead of finding a BLUE ball among TRI balls, I can find a TRI ball among BLUE balls.

P(Shape=TRI, Color=BLUE) = 1/5

or

P(Shape=TRI | Color=BLUE)*P(Color=BLUE) = 1/3 * 3/5 = 1/5

In fact, both factorizations give the same joint probability, and you will see later that this is the basis for Bayes’ rule.
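The symmetry can be checked by computing both factorizations side by side. This is a sketch under the same assumptions as before: hypothetical `BOX` and `prob` names, ball contents reconstructed from the text.

```python
from fractions import Fraction

# Balls reconstructed from the counts in the text (Figure 1 is not reproduced).
BOX = [
    {"Color": "RED",  "Shape": "TRI",  "Object": "SUN"},
    {"Color": "RED",  "Shape": "TRI",  "Object": "MOON"},
    {"Color": "BLUE", "Shape": "TRI",  "Object": "SUN"},
    {"Color": "BLUE", "Shape": "RECT", "Object": "SUN"},
    {"Color": "BLUE", "Shape": "RECT", "Object": "MOON"},
]

def prob(event, given=None):
    """P(event | given), found by counting balls."""
    given = given or {}
    pool = [b for b in BOX if all(b[k] == v for k, v in given.items())]
    hits = [b for b in pool if all(b[k] == v for k, v in event.items())]
    return Fraction(len(hits), len(pool))

# BLUE among TRI balls, times P(TRI).
tri_first = prob({"Color": "BLUE"}, given={"Shape": "TRI"}) * prob({"Shape": "TRI"})
# TRI among BLUE balls, times P(BLUE).
blue_first = prob({"Shape": "TRI"}, given={"Color": "BLUE"}) * prob({"Color": "BLUE"})
print(tri_first, blue_first)  # 1/5 1/5
```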

I have another observation about marginal probability. As shown in Figure 2, the marginal probability of getting a TRI ball can be thought of as the sum of two joint probabilities, one for each color. This is known as marginalization*. In this example, I can find P(Shape=TRI) by adding two joint probabilities:

P(Shape=TRI) = P(Shape=TRI, Color=RED) + P(Shape=TRI, Color=BLUE)
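Marginalization is a sum of joint probabilities over every value of the variable being "summed out". The sketch below (hypothetical `BOX` and `prob` names, ball contents reconstructed from the text) sums over all colors that appear in the box and compares the result with the directly counted marginal.

```python
from fractions import Fraction

# Balls reconstructed from the counts in the text (Figure 1 is not reproduced).
BOX = [
    {"Color": "RED",  "Shape": "TRI",  "Object": "SUN"},
    {"Color": "RED",  "Shape": "TRI",  "Object": "MOON"},
    {"Color": "BLUE", "Shape": "TRI",  "Object": "SUN"},
    {"Color": "BLUE", "Shape": "RECT", "Object": "SUN"},
    {"Color": "BLUE", "Shape": "RECT", "Object": "MOON"},
]

def prob(event, given=None):
    """P(event | given), found by counting balls."""
    given = given or {}
    pool = [b for b in BOX if all(b[k] == v for k, v in given.items())]
    hits = [b for b in pool if all(b[k] == v for k, v in event.items())]
    return Fraction(len(hits), len(pool))

# Sum the joint probability P(Shape=TRI, Color=c) over every color c.
colors = {b["Color"] for b in BOX}
total = sum(prob({"Shape": "TRI", "Color": c}) for c in colors)
print(total, prob({"Shape": "TRI"}))  # 3/5 3/5
```

With only two colors the sum has two terms (2/5 + 1/5); the same loop would work unchanged for any number of colors.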

I think marginalization is an important concept that deserves special attention, since it appears in many Bayesian inference problems. When the sum runs over many variables or values, computing it exactly may not be computationally feasible, and it has to be approximated instead.

Let’s solve one more joint probability problem. What is the probability of getting a BLUE ball with RECT and SUN on its surface? If you look at Figure 1, you can easily spot one ball with these 3 characteristics.

P(Object=SUN, Shape=RECT, Color=BLUE) = 1/5

We can also look at this as the product of a conditional probability and a joint probability. Since there are two BLUE balls with RECT (2/5), and only one of them (1/2) has SUN, we can write it as follows:

= P(Object=SUN | Shape=RECT, Color=BLUE) * P(Shape=RECT, Color=BLUE)

= 1/2 * 2/5 = 1/5

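You can break it down further by applying the same rule to P(Shape=RECT, Color=BLUE). This repeated factorization is often called the chain rule of probability: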

= P(Object=SUN | Shape=RECT, Color=BLUE) * P(Shape=RECT | Color=BLUE) * P(Color=BLUE)

= 1/2 * 2/3 * 3/5 = 1/5
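The three-variable factorization can be verified the same way. In this sketch (hypothetical `BOX` and `prob` names, ball contents reconstructed from the text) each factor conditions on everything to its right.

```python
from fractions import Fraction

# Balls reconstructed from the counts in the text (Figure 1 is not reproduced).
BOX = [
    {"Color": "RED",  "Shape": "TRI",  "Object": "SUN"},
    {"Color": "RED",  "Shape": "TRI",  "Object": "MOON"},
    {"Color": "BLUE", "Shape": "TRI",  "Object": "SUN"},
    {"Color": "BLUE", "Shape": "RECT", "Object": "SUN"},
    {"Color": "BLUE", "Shape": "RECT", "Object": "MOON"},
]

def prob(event, given=None):
    """P(event | given), found by counting balls."""
    given = given or {}
    pool = [b for b in BOX if all(b[k] == v for k, v in given.items())]
    hits = [b for b in pool if all(b[k] == v for k, v in event.items())]
    return Fraction(len(hits), len(pool))

joint = prob({"Object": "SUN", "Shape": "RECT", "Color": "BLUE"})
chain = (prob({"Object": "SUN"}, given={"Shape": "RECT", "Color": "BLUE"})  # 1/2
         * prob({"Shape": "RECT"}, given={"Color": "BLUE"})                 # 2/3
         * prob({"Color": "BLUE"}))                                         # 3/5
print(joint, chain)  # 1/5 1/5
```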

We are now done with basic probability concepts! The next topic I want to introduce is Bayes’ rule.

Bayes’ Rule

Previously, I discussed a box of 5 colored balls, each with either a TRI or a RECT shape. You can see that the two groups intersect: there is a BLUE ball with a TRI shape on its surface. In this section, I am going to ignore objects (SUN and MOON).

Bayes’ rule starts from the fact that the joint probability of getting a BLUE ball with TRI is equal to the joint probability of getting a TRI ball with BLUE:

P(Color=BLUE, Shape=TRI) = P(Shape=TRI, Color=BLUE)

This is obvious, right? But the beauty of this formulation is that I can now express each joint probability as a conditional probability times a marginal probability, as shown below:

P(Color=BLUE | Shape=TRI) * P(Shape=TRI) = P(Shape=TRI | Color=BLUE) * P(Color=BLUE)

Then, I can say that the conditional probability of getting a BLUE ball given that it has a TRI shape is equal to the joint probability of getting a TRI and BLUE ball divided by the marginal probability of getting a TRI ball:

P(Color=BLUE | Shape=TRI) = P(Shape=TRI, Color=BLUE) / P(Shape=TRI) = (1/5) / (3/5) = 1/3

or, abbreviating Color as C and Shape as S:

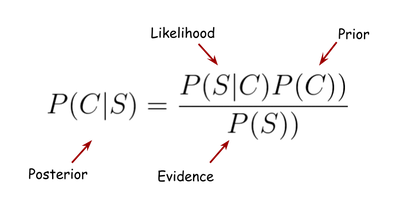

In this formulation, P(S|C) is often referred to as the “likelihood”, P(C) as the “prior”, P(S) as the “evidence”, and P(C|S) as the “posterior”. I will go over each of these components in Part 2. Let me conclude this part by introducing the independent observations assumption.
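The four components can be checked numerically on the box. This minimal sketch (hypothetical `BOX` and `prob` names, ball contents reconstructed from the text, objects omitted) computes the posterior from likelihood, prior and evidence, and compares it with the directly counted conditional probability.

```python
from fractions import Fraction

# Color and shape of each ball, reconstructed from the counts in the text.
BOX = [
    {"Color": "RED",  "Shape": "TRI"},
    {"Color": "RED",  "Shape": "TRI"},
    {"Color": "BLUE", "Shape": "TRI"},
    {"Color": "BLUE", "Shape": "RECT"},
    {"Color": "BLUE", "Shape": "RECT"},
]

def prob(event, given=None):
    """P(event | given), found by counting balls."""
    given = given or {}
    pool = [b for b in BOX if all(b[k] == v for k, v in given.items())]
    hits = [b for b in pool if all(b[k] == v for k, v in event.items())]
    return Fraction(len(hits), len(pool))

likelihood = prob({"Shape": "TRI"}, given={"Color": "BLUE"})  # P(S|C) = 1/3
prior = prob({"Color": "BLUE"})                               # P(C)   = 3/5
evidence = prob({"Shape": "TRI"})                             # P(S)   = 3/5
posterior = likelihood * prior / evidence                     # P(C|S) via Bayes' rule

print(posterior, prob({"Color": "BLUE"}, given={"Shape": "TRI"}))  # 1/3 1/3
```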

Independent Observations Assumption

What is the difference between P(Color=BLUE | Shape=TRI) and P(Color=BLUE | Shape=Any)? The first one has a condition: the balls I am interested in must have a TRI shape. In the latter, it does not matter whether a ball has TRI or RECT, so I am not setting any restriction on the ball I am trying to get. Therefore, I can also say that

P(Color=BLUE) = P(Color=BLUE | Shape=Any)*P(Shape=Any)

3/5 = (3/5) * 1

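Conditioning on “any shape” imposes no restriction, so it leaves the probability of the color unchanged. More generally, Color and Shape are called independent when knowing one tells us nothing about the other, that is, when conditioning on one does not change the probability of the other:

P(Color) = P(Color | Shape)

or

P(Shape) = P(Shape | Color)

For independent variables, the joint probability is simply the product of the marginal probabilities: P(Color, Shape) = P(Color | Shape) * P(Shape) = P(Color) * P(Shape). Note that Color and Shape in our box are not independent: P(Color=BLUE | Shape=TRI) = 1/3, whereas P(Color=BLUE) = 3/5.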
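A simple way to test independence is to compare every conditional probability with the corresponding marginal probability. The sketch below does that for the box (colors and shapes reconstructed from the text) and for a second, purely hypothetical 4-ball box in which each color appears once with each shape. `prob` and `independent` are illustrative names, not a library API; here `prob` takes the box as an argument so it can be reused for both boxes.

```python
from fractions import Fraction

# Color and shape of each ball, reconstructed from the counts in the text.
BOX = [
    {"Color": "RED",  "Shape": "TRI"},
    {"Color": "RED",  "Shape": "TRI"},
    {"Color": "BLUE", "Shape": "TRI"},
    {"Color": "BLUE", "Shape": "RECT"},
    {"Color": "BLUE", "Shape": "RECT"},
]

# Hypothetical box: every color appears exactly once with every shape.
BALANCED = [
    {"Color": "RED",  "Shape": "TRI"},
    {"Color": "RED",  "Shape": "RECT"},
    {"Color": "BLUE", "Shape": "TRI"},
    {"Color": "BLUE", "Shape": "RECT"},
]

def prob(box, event, given=None):
    """P(event | given) in `box`, found by counting balls."""
    given = given or {}
    pool = [b for b in box if all(b[k] == v for k, v in given.items())]
    hits = [b for b in pool if all(b[k] == v for k, v in event.items())]
    return Fraction(len(hits), len(pool))

def independent(box, attr_a, attr_b):
    """True if P(a | b) == P(a) for every observed value a of attr_a and b of attr_b."""
    values_a = {ball[attr_a] for ball in box}
    values_b = {ball[attr_b] for ball in box}
    return all(prob(box, {attr_a: a}, given={attr_b: b}) == prob(box, {attr_a: a})
               for a in values_a for b in values_b)

print(independent(BOX, "Color", "Shape"))       # False: P(BLUE | TRI) = 1/3, P(BLUE) = 3/5
print(independent(BALANCED, "Color", "Shape"))  # True
```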

Let me give you another example to make this concept concrete. Let’s ignore the shape for now. I am going to draw a ball and, shortly after, another ball. What is the probability of getting a BLUE ball and then a RED ball, regardless of shape? I can simply multiply the probability of getting a BLUE ball by that of getting a RED ball, as shown below, because every BLUE ball can form a pair with every RED ball, right? Also, keep in mind that the order of drawing, BLUE and then RED, matters in this example.

P(Color=BLUE) = 3/5

P(Color=RED) = 2/5

P(Color=BLUE) * P(Color=RED) = (3/5) * (2/5) = 6/25 = 0.24

What I did was randomly draw 2 samples, a BLUE ball and then a RED ball, out of the box. With 2 draws, I can list all possible ordered pairs. Let’s count all of them to verify this.

The balls are { B¹, B², B³, R¹, R² }, where B represents a BLUE ball and R represents a RED ball. The superscript identifies a specific ball in the box.

Let’s list all possible ordered pairs: { B¹B², B¹B³, B²B¹, B²B³, B³B¹, B³B², R¹R², R²R¹, B¹R¹, B¹R², B²R¹, B²R², B³R¹, B³R², R¹B¹, R¹B², R¹B³, R²B¹, R²B², R²B³ }. There are 20 pairs in total, and 6 of them are a BLUE ball followed by a RED ball (BR), so the probability of getting a BLUE ball and then a RED ball is 6/20.

Number of pairs in { B¹R¹, B¹R², B²R¹, B²R², B³R¹, B³R² } / 20 = 6/20 = 0.3
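The enumeration can be reproduced with Python's standard `itertools.permutations`, which yields every ordered pair of two distinct items, exactly the list written out by hand. In this sketch the labels `B1`, `B2`, `B3`, `R1`, `R2` stand in for B¹, B², B³, R¹, R².

```python
from fractions import Fraction
from itertools import permutations

BALLS = ["B1", "B2", "B3", "R1", "R2"]  # B = BLUE, R = RED; digits replace superscripts

# permutations(BALLS, 2) yields every ordered pair of two *different* balls.
pairs = list(permutations(BALLS, 2))
blue_then_red = [p for p in pairs if p[0].startswith("B") and p[1].startswith("R")]

print(len(pairs), len(blue_then_red))            # 20 6
print(Fraction(len(blue_then_red), len(pairs)))  # 3/10, i.e. 6/20
```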

Something went wrong. When I calculated the probability of getting a BLUE ball and then a RED ball, I got 6/25, but when I counted the pairs by hand, the result was 6/20.

What was the problem? It looks like I forgot to include 5 additional pairs, { B¹B¹, B²B², B³B³, R¹R¹, R²R² }, in the set of all possible outcomes. But do I need to include them? Actually, it makes no sense to include these pairs. Here is why: if I have chosen B¹, there is no way I can draw B¹ again, because it is no longer in the box; I have to choose a different ball. In other words, once one of the BLUE balls is taken out of the box, the underlying probabilities change. After a BLUE ball is drawn first, there are 2 BLUE balls and 2 RED balls left in the box. Writing P¹ for the probabilities after the first draw, the probability of getting a BLUE ball is P¹(Color=BLUE) = 2/4, and the probability of getting a RED ball is the same: P¹(Color=RED) = 2/4. So the correct formulation is:

P(Color=BLUE) * P¹(Color=RED) = (3/5) * (2/4) = 6/20

In fact, our initial formulation, P(Color=BLUE) * P(Color=RED) = 6/25, was based on the assumption that I put the first ball (BLUE) back into the box before drawing the next one. This is called drawing with replacement, and it makes the draws independent observations: the result of the first draw does not change the probabilities for the second. This independent observations assumption makes things simpler as we delve into the main topic. Draws without replacement can also be treated as approximately independent when the box holds many more balls than the number of samples drawn, because removing a few balls barely changes the proportions.
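Both formulations can be compared by brute-force enumeration. In this sketch, `itertools.product(balls, repeat=2)` lets the same ball appear twice (it was put back), while `itertools.permutations(balls, 2)` never reuses a ball (it stays out of the box). The function name `p_blue_then_red` is hypothetical, and the 300/200 box in the second pair of calls is an illustrative size chosen to keep the same 3:2 ratio as the original box.

```python
from fractions import Fraction
from itertools import permutations, product

def p_blue_then_red(n_blue, n_red, replace):
    """P(BLUE on the first draw, then RED on the second), by counting ordered pairs."""
    balls = ["B"] * n_blue + ["R"] * n_red
    # With replacement: every ordered pair, including the same ball twice.
    # Without replacement: only pairs of two different balls (positions).
    pairs = product(balls, repeat=2) if replace else permutations(balls, 2)
    total = hits = 0
    for first, second in pairs:
        total += 1
        hits += first == "B" and second == "R"
    return Fraction(hits, total)

print(p_blue_then_red(3, 2, replace=True))   # 6/25
print(p_blue_then_red(3, 2, replace=False))  # 3/10, i.e. 6/20

# Same 3:2 ratio in a much bigger box: the two answers nearly coincide.
print(float(p_blue_then_red(300, 200, replace=True)))   # 0.24
print(float(p_blue_then_red(300, 200, replace=False)))  # about 0.2405
```

With 5 balls, removing one changes the second-draw probabilities a lot (6/25 versus 6/20); with 500 balls, the difference almost disappears.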

In Part 2, I will discuss random sampling, Bernoulli trials, and the different components of Bayes’ rule in order to find out how to measure likelihood, and how the likelihood of my hypothesis (guess) becomes more apparent as more observations are presented. Consequently, my previous belief (hypothesis) is reinforced or weakened based on the observations.
